Package dalex

dalex: Responsible Machine Learning in Python

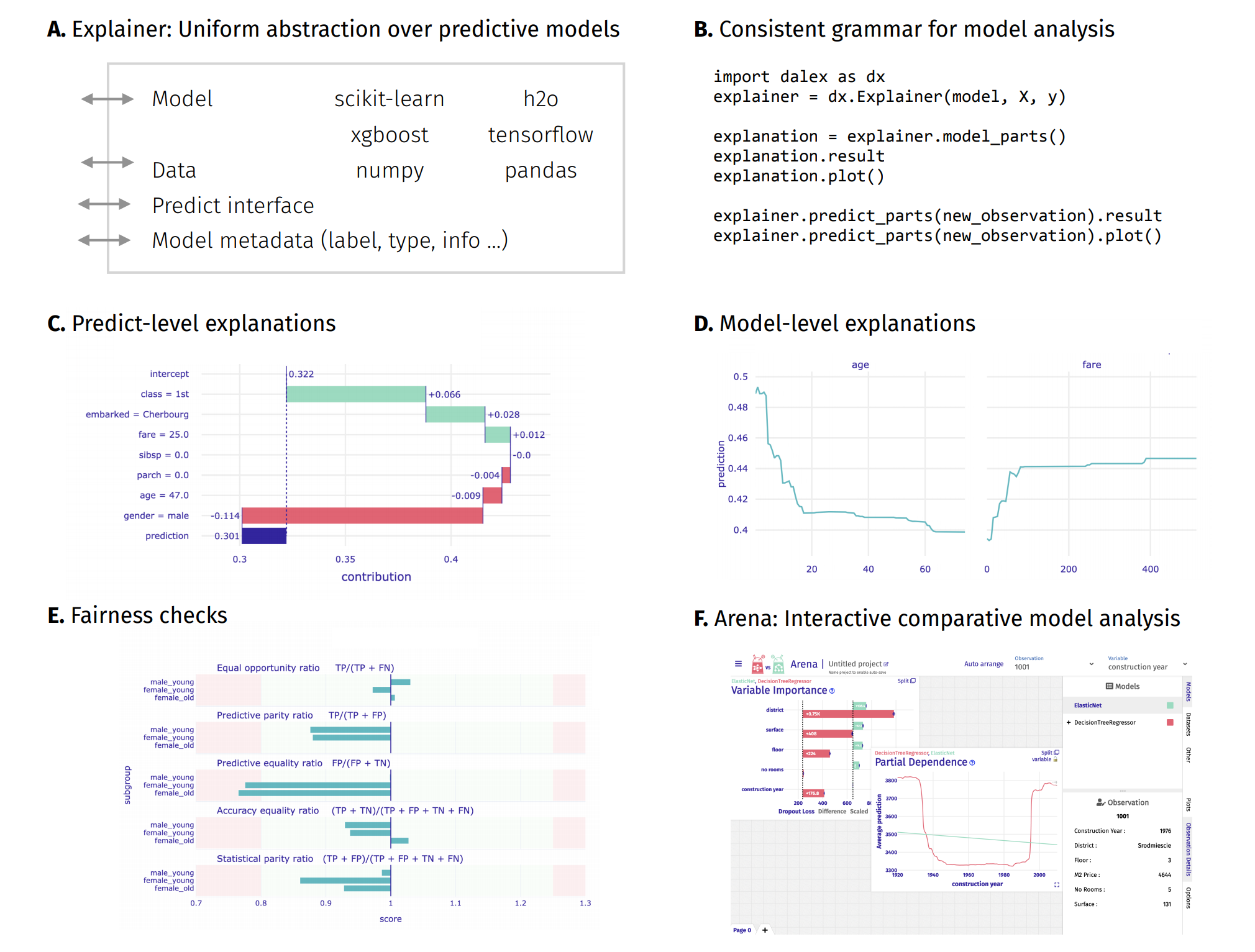

Overview

Unverified black box model is the path to the failure. Opaqueness leads to distrust. Distrust leads to ignoration. Ignoration leads to rejection.

The dalex package xrays any model and helps to explore and explain its behaviour, helps to understand how complex models are working.

The main Explainer object creates a wrapper around a predictive model. Wrapped models may then be explored and compared with a collection of model-level and predict-level explanations. Moreover, there are fairness methods and interactive exploration dashboards available to the user.

The philosophy behind dalex explanations is described in the Explanatory Model Analysis book.

Installation

The dalex package is available on PyPI and conda-forge.

pip install dalex -U

conda install -c conda-forge dalex

One can install optional dependencies for all additional features using pip install dalex[full].

Examples

- Introduction to the

dalexpackage: Titanic: tutorial and examples - Key features explained: FIFA20: explain default vs tuned model with dalex

- How to use dalex with: xgboost, tensorflow, h2o (feat. autokeras, catboost, lightgbm)

- More explanations: residuals, shap, lime

- Introduction to the Fairness module in dalex

- Tutorial on bias detection with dalex

- Introduction to the Aspect module in dalex

- Introduction to the Arena module in dalex

- Arena documentation: Getting Started & Demos

- Code in the form of jupyter notebook

Plots

This package uses plotly to render the plots:

- Install extensions to use

plotlyin JupyterLab: Getting Started Troubleshooting - Use

show=Falseparameter inplotmethod to returnplotly Figureobject - It is possible to edit the figures and save them

Citation

If you use dalex, please cite our JMLR paper:

@article{JMLR:v22:20-1473,

author = {Hubert Baniecki and

Wojciech Kretowicz and

Piotr Piatyszek and

Jakub Wisniewski and

Przemyslaw Biecek},

title = {dalex: Responsible Machine Learning

with Interactive Explainability and Fairness in Python},

journal = {Journal of Machine Learning Research},

year = {2021},

volume = {22},

number = {214},

pages = {1-7},

url = {http://jmlr.org/papers/v22/20-1473.html}

}

Developer

There is a detailed instruction on how to add native support for a new model/framework into dalex, and how to add a new explanation method.

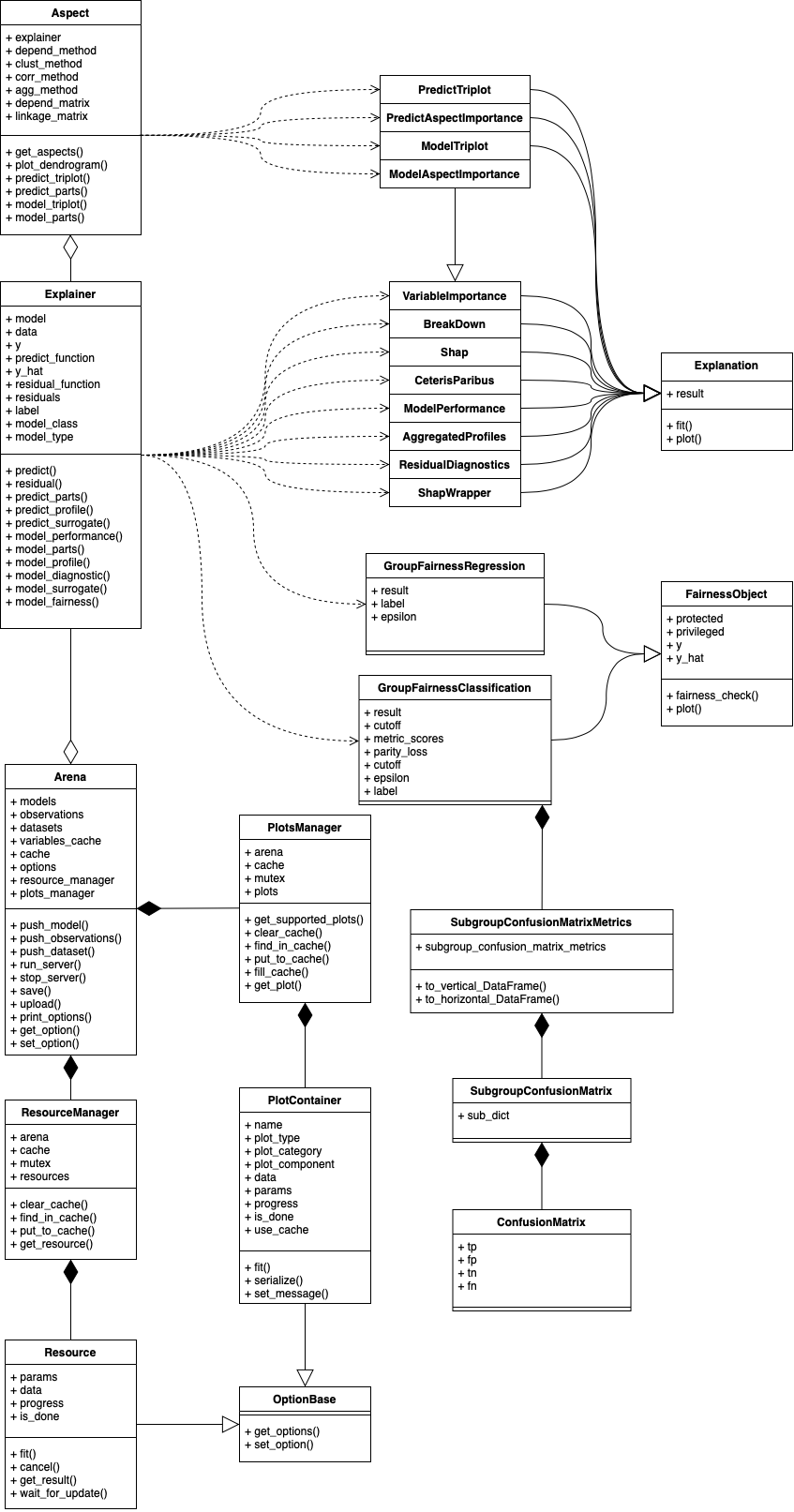

Class diagram (v1.4.0)

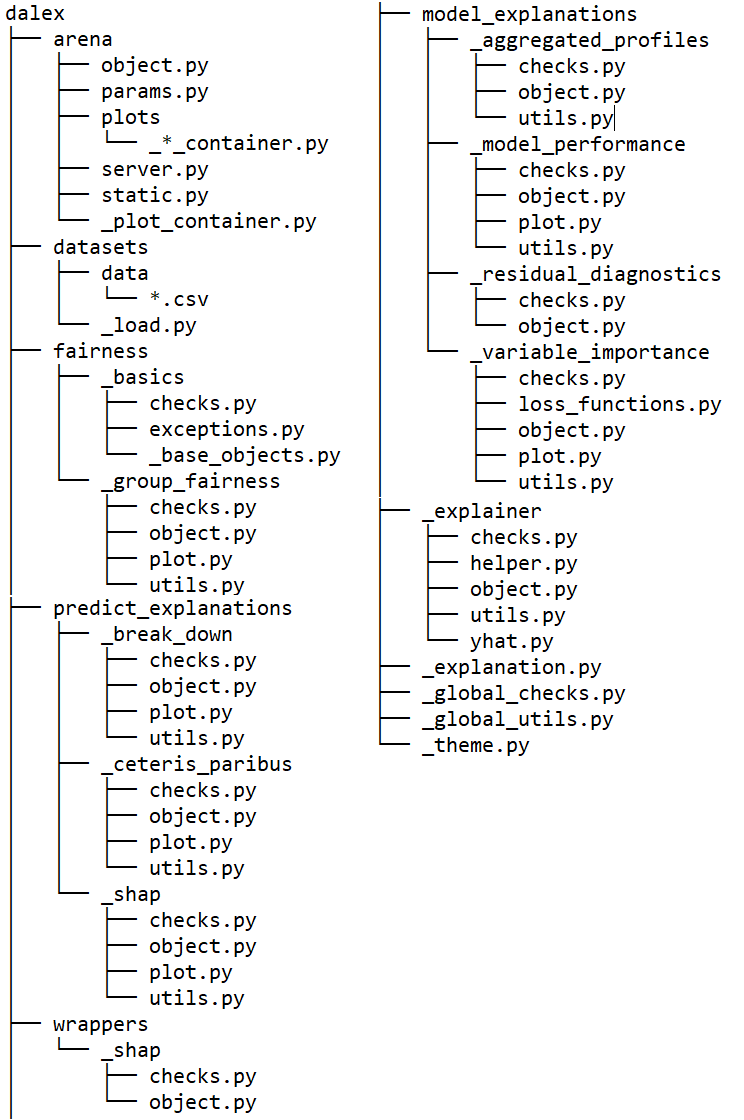

Folder structure (v1.3.0)

Expand source code Browse git

"""

.. include:: ./documentation.md

"""

from . import datasets

from ._explainer.object import Explainer

from .arena.object import Arena

from .aspect import Aspect

__version__ = '1.4.0'

__all__ = [

"Arena",

"Aspect",

"datasets",

"Explainer",

"fairness"

]

# specify autocompletion in IPython

# see comment: https://github.com/ska-telescope/katpoint/commit/ed7e8b9e389ee035073c62c2394975fe71031f88

# __dir__ docs (Python 3.7!): https://docs.python.org/3.7/library/functions.html#dir

def __dir__():

"""IPython tab completion seems to respect this."""

return __all__ + [

"__all__",

"__builtins__",

"__cached__",

"__doc__",

"__file__",

"__loader__",

"__name__",

"__package__",

"__path__",

"__spec__",

"__version__",

]Sub-modules

dalex.arenadalex.aspectdalex.datasetsdalex.fairnessdalex.model_explanationsdalex.predict_explanationsdalex.wrappers

Classes

class Arena (precalculate=False, enable_attributes=True, enable_custom_params=True, verbose=True)-

Creates Arena object

This class should be used to create Python connector for Arena dashboard. Initialized object can work both in static and live mode. Use

push_*methods to add your models, observations and datasets.Parameters

precalculate:bool- Enables precalculating plots after using each

push_*method. enable_attributes:bool- Enables attributes of observations and variables. Attributes are required to display details of observation in Arena, but it also increases generated file size.

enable_custom_params:bool- Enables modififying observations in dashboard. It requires attributes and works only in live version.

verbose:bool- Enables printing progresss of computations

Attributes

models:listofModelParam objects- List of pushed models encapsulated in ModelParam class

observations:listofObservationParam objects- List of pushed observations encapsulated in ObservationParam class

datasets:listofDatasetParam objects- List of pushed datasets encapsulated in DatasetParam class

variables_cache:listofVariableParam objects- Cached list of VariableParam objects generated using pushed models and datasets

server_thread:threading.Thread- Thread of running server or None otherwise

precalculate:bool- if plots should be precalculated

enable_attributes:bool- if attributes are enabled

enable_custom_params:bool- if modifying observations is enabled

timestamp:float- timestamp of last modification

mutex:_thread.lock- Mutex for params, plots and resources cache. Common to Arena, PlotsManager and ResourcesManager class.

options:dict- Options for plots

resource_manager:ResourceManager- Object responsible for managing resources

plots_manager:PlotsManager- Object responsible for managing plots

verbose:bool- If progress of computations should be displayed

Notes

For tutorial look at https://arena.drwhy.ai/docs/guide/first-datasource

Expand source code Browse git

class Arena: """ Creates Arena object This class should be used to create Python connector for Arena dashboard. Initialized object can work both in static and live mode. Use `push_*` methods to add your models, observations and datasets. Parameters ---------- precalculate : bool Enables precalculating plots after using each `push_*` method. enable_attributes : bool Enables attributes of observations and variables. Attributes are required to display details of observation in Arena, but it also increases generated file size. enable_custom_params : bool Enables modififying observations in dashboard. It requires attributes and works only in live version. verbose : bool Enables printing progresss of computations Attributes -------- models : list of ModelParam objects List of pushed models encapsulated in ModelParam class observations : list of ObservationParam objects List of pushed observations encapsulated in ObservationParam class datasets : list of DatasetParam objects List of pushed datasets encapsulated in DatasetParam class variables_cache : list of VariableParam objects Cached list of VariableParam objects generated using pushed models and datasets server_thread : threading.Thread Thread of running server or None otherwise precalculate : bool if plots should be precalculated enable_attributes : bool if attributes are enabled enable_custom_params : bool if modifying observations is enabled timestamp : float timestamp of last modification mutex : _thread.lock Mutex for params, plots and resources cache. Common to Arena, PlotsManager and ResourcesManager class. options : dict Options for plots resource_manager: ResourceManager Object responsible for managing resources plots_manager: PlotsManager Object responsible for managing plots verbose : bool If progress of computations should be displayed Notes -------- For tutorial look at https://arena.drwhy.ai/docs/guide/first-datasource """ def __init__(self, precalculate=False, enable_attributes=True, enable_custom_params=True, verbose=True): self.mutex = threading.Lock() self.models = [] self.observations = [] self.datasets = [] self.variables_cache = [] self.resource_manager = ResourceManager(self) self.plots_manager = PlotsManager(self) self.server_thread = None self.precalculate = bool(precalculate) self.enable_attributes = bool(enable_attributes) self.enable_custom_params = bool(enable_custom_params) self.verbose = bool(verbose) self.timestamp = datetime.timestamp(datetime.now()) self.options = {} for x in (self.plots_manager.plots + self.resource_manager.resources): options = self.options.get(x.options_category) or {} for o in x.options.keys(): options[o] = {'value': x.options.get(o).get('default'), 'desc': x.options.get(o).get('desc')} self.options[x.options_category] = options def get_supported_plots(self): """Returns plots classes that can produce at least one valid chart for this Arena. Returns ----------- List of classes extending PlotContainer """ return self.plots_manager.get_supported_plots() def run_server(self, host='127.0.0.1', port=8181, append_data=False, arena_url='https://arena.drwhy.ai/', disable_logs=True): """Starts server for live mode of Arena Parameters ----------- host : str ip or hostname for the server port : int port number for the server append_data : bool if generated link should append data to already existing Arena window. arena_url : str URl of Arena dhasboard disable_logs : str if logs should be muted Notes -------- Read more about data sources https://arena.drwhy.ai/docs/guide/basic-concepts Returns ----------- Link to Arena """ if self.server_thread: raise Exception('Server is already running. To stop ip use arena.stop_server().') global_check_import('flask') global_check_import('flask_cors') global_check_import('requests') self.server_thread = threading.Thread(target=start_server, args=(self, host, port, disable_logs)) self.server_thread.start() if append_data: print(arena_url + '?append=http://' + host + ':' + str(port) + '/') else: print(arena_url + '?data=http://' + host + ':' + str(port) + '/') def stop_server(self): """Stops running server""" if not self.server_thread: raise Exception('Server is not running') self._stop_server() self.server_thread.join() self.server_thread = None def update_timestamp(self): """Updates timestamp Notes ------- This function must be called from mutex context """ now = datetime.now() self.timestamp = datetime.timestamp(now) def push_model(self, explainer, precalculate=None): """Adds model to Arena This method encapsulate explainer in ModelParam object and save appends models fields. When precalculation is enabled triggers filling cache. Parameters ----------- explainer : dalex.Explainer Explainer created using dalex package precalculate : bool or None Overrides constructor `precalculate` parameter when it is not None. If true, then only plots using this model will be precalculated. """ if not isinstance(explainer, Explainer): raise Exception('Invalid Explainer argument') if explainer.label in self.list_params('model'): raise Exception('Explainer with the same label was already added') precalculate = self.precalculate if precalculate is None else bool(precalculate) param = ModelParam(explainer) with self.mutex: self.update_timestamp() self.models.append(param) self.variables_cache = [] if precalculate: self.plots_manager.fill_cache({'model': param}) def push_observations(self, observations, precalculate=None): """Adds observations to Arena Pushed observations will be used to local explainations. Function creates ObservationParam object for each row of pushed dataset. Label for each observation is taken from row name. When precalculation is enabled triggers filling cache. Parameters ----------- observations : pandas.DataFrame Data frame of observations to be explained using instance level plots. Label for each observation is taken from row name. precalculate : bool or None Overrides constructor `precalculate` parameter when it is not None. If true, then only plots using thease observations will be precalculated. """ if not isinstance(observations, DataFrame): raise Exception('Observations argument is not a pandas DataFrame') if len(observations.index.names) != 1: raise Exception('Observations argument need to have only one index') if not observations.index.is_unique: raise Exception('Observations argument need to have unique indexes') precalculate = self.precalculate if precalculate is None else bool(precalculate) old_observations = self.list_params('observation') observations = observations.set_index(observations.index.astype(str)) params_objects = [] for x in observations.index: if x in old_observations: raise Exception('Indexes of observations need to be unique across all observations') params_objects.append(ObservationParam(dataset=observations, index=x)) with self.mutex: self.update_timestamp() self.observations.extend(params_objects) if precalculate: for obs in params_objects: self.plots_manager.fill_cache({'observation': obs}) def push_dataset(self, dataset, target, label, precalculate=None): """Adds dataset to Arena Pushed dataset will visualised using exploratory data analysis plots. Function creates DatasetParam object with specified label and target name. When precalculation is enabled triggers filling cache. Parameters ----------- dataset : pandas.DataFrame Data frame to be visualised using EDA plots. This dataset should contain target variable. target : str Name of target column label : str Label for this dataset precalculate : bool or None Overrides constructor `precalculate` parameter when it is not None. If true, then only plots using this model will be precalculated. """ if not isinstance(dataset, DataFrame): raise Exception('Dataset argument is not a pandas DataFrame') if len(dataset.columns.names) != 1: raise Exception('Dataset argument need to have only one level column names') precalculate = self.precalculate if precalculate is None else bool(precalculate) target = str(target) if target not in dataset.columns: raise Exception('Target is not a column from dataset') if (not isinstance(label, str)) or (len(label) == 0): raise Exception('Label need to be at least one letter') if label in self.list_params('dataset'): raise Exception('Labels need to be unique') param = DatasetParam(dataset=dataset, label=label, target=target) with self.mutex: self.update_timestamp() self.datasets.append(param) self.variables_cache = [] if precalculate: self.plots_manager.fill_cache({'dataset': param}) def get_params(self, param_type): """Returns list of available params Parameters ----------- param_type : str One of ['model', 'variable', 'observation', 'dataset']. Params of this type will be returned Notes -------- Information about params https://arena.drwhy.ai/docs/guide/params Returns -------- List of Param objects """ if param_type == 'observation': with self.mutex: return self.observations elif param_type == 'variable': with self.mutex: if not self.variables_cache: # Extract column names from every dataset in self.dataset list and flatten it result_datasets = [col for dataset in self.datasets for col in dataset.variables] # Extract column names from every model in self.models list and flatten it result_explainers = [col for model in self.models for col in model.variables] result_str = np.unique(result_datasets + result_explainers).tolist() self.variables_cache = [VariableParam(x) for x in result_str] if self.enable_attributes: for var in self.variables_cache: try: for dataset in self.datasets: if var.variable in dataset.variables: var.update_attributes(dataset.dataset[var.variable]) for model in self.models: if var.variable in model.variables: var.update_attributes(model.explainer.data[var.variable]) except: var.clear_attributes() return self.variables_cache elif param_type == 'model': with self.mutex: return self.models elif param_type == 'dataset': with self.mutex: return self.datasets else: raise Exception('Invalid param type') def list_params(self, param_type): """Returns list of available params's labels Parameters ----------- param_type : str One of ['model', 'variable', 'observation', 'dataset']. Labels of params of this type will be returned Notes -------- Information about params https://arena.drwhy.ai/docs/guide/params Returns -------- List of str """ return [x.get_label() for x in self.get_params(param_type)] def get_available_params(self): """Returns dict containing available params of all types This method collect result of `get_params` method for each param type into a dict. Keys are param types and values are lists of Param objects. Notes -------- Information about params https://arena.drwhy.ai/docs/guide/params Returns -------- dict """ result = {} for param_type in ['model', 'observation', 'variable', 'dataset']: result[param_type] = self.get_params(param_type) return result def list_available_params(self): """Returns dict containing labels of available params of all types This methods collect result of `list_params` for each param type into a dict. Keys are param types and values are list of labels. Notes -------- Information about params https://arena.drwhy.ai/docs/guide/params Returns -------- dict """ result = {} for param_type in ['model', 'observation', 'variable', 'dataset']: result[param_type] = self.list_params(param_type) return result def find_param_value(self, param_type, param_label): """Searches for Param object with specified label Parameters ----------- param_type : str One of ['model', 'variable', 'observation', 'dataset']. param_label : str Label of searched param Notes -------- Information about params https://arena.drwhy.ai/docs/guide/params Returns -------- Param or None """ if param_label is None or not isinstance(param_label, str): return None return next((x for x in self.get_params(param_type) if x.get_label() == param_label), None) def print_options(self, options_category=None): """Prints available options for plots Parameters ----------- options_category : str or None When not None, then only options for plots or resources with this category will be printed. Notes -------- List of plots with described options for each one https://arena.drwhy.ai/docs/guide/observation-level """ options = self.options.get(options_category) if options is None: for category in self.options.keys(): self.print_options(category) return if len(options.keys()) == 0: return print('\n\033[1m' + options_category + '\033[0m') print('---------------------------------') for option_name in options.keys(): value = options.get(option_name).get('value') print(option_name + ': ' + str(value) + ' #' + options.get(option_name).get('desc')) def get_option(self, options_category, option): """Returns value of specified option Parameters ----------- options_category : str Category of option. In most cases category is coresponds to one plot_type. Categories are underlined in the output of arena.print_options() option : str Name of the option Notes -------- List of plots with described options for each one https://arena.drwhy.ai/docs/guide/observation-level Returns -------- None or value of option """ options = self.options.get(options_category) if options is None: raise Exception('Invalid options category') if option not in options.keys(): return with self.mutex: return self.options.get(options_category).get(option).get('value') def set_option(self, options_category, option, value): """Sets value for the plot option Parameters ----------- options_category : str or None When None, then value will be set for each plot and resource having option with name equal to `option` argument. Otherwise only for plots and resources with specified options_category. In most cases category is coresponds to one plot_type. Categories are underlined in the output of arena.print_options() option : str Name of the option value : * Value to be set Notes -------- List of plots with described options for each one https://arena.drwhy.ai/docs/guide/observation-level """ if options_category is None: for category in self.options.keys(): self.set_option(category, option, value) return options = self.options.get(options_category) if options is None: raise Exception('Invalid options category') if option not in options.keys(): return with self.mutex: self.options[options_category][option]['value'] = value for plot_type in np.unique([x.info.get('plotType') for x in self.plots_manager.plots if x.options_category == options_category]): self.plots_manager.clear_cache(plot_type) for resource_type in np.unique([x.resource_type for x in self.resource_manager.resources if x.options_category == options_category]): self.resource_manager.clear_cache(resource_type) if self.precalculate: self.plots_manager.fill_cache() def get_params_attributes(self, param_type=None): """Returns attributes for all params When `param_type` is not None, then function returns list of dicts. Each dict represents one of available attribute for specified param type. Field `name` is attribute name and field `values` is mapped list of available params to list of value of attribute. When `param_type` is None, then function returns dict with keys for each param type and values are lists described above. Parameters ----------- param_type : str or None One of ['model', 'variable', 'observation', 'dataset'] or None. Specifies attributes of which params should be returned. Notes -------- Attribused are used for dynamicly modifying observations https://arena.drwhy.ai/docs/guide/modifying-observations Returns -------- dict or list """ if param_type is None: obj = {} for p in ['model', 'observation', 'variable', 'dataset']: obj[p] = self.get_params_attributes(p) return obj if not self.enable_attributes: return [] attrs = Param.get_param_class(param_type).list_attributes(self) array = [] for attr in attrs: array.append({ 'name': attr, 'values': [param.get_attributes().get(attr) for param in self.get_params(param_type)] }) return array def get_param_attributes(self, param_type, param_label): """Returns attributes for one param Function searches for param with specified type and label and returns it's attributes. Parameters ----------- param_type : str One of ['model', 'variable', 'observation', 'dataset']. param_label : str Label of param Notes -------- Attribused are used for dynamicly modifying observations https://arena.drwhy.ai/docs/guide/modifying-observations Returns -------- dict """ if not self.enable_attributes: return {} param_value = self.find_param_value(param_type=param_type, param_label=param_label) if param_value: return param_value.get_attributes() else: return {} def save(self, filename="datasource.json"): """Generate all plots and saves them to JSON file Function generates only not cached plots. Parameters ----------- filename : str Path or filename to output file Notes -------- Read more about data sources https://arena.drwhy.ai/docs/guide/basic-concepts Returns -------- None """ with open(filename, 'w') as file: file.write(get_json(self)) def upload(self, token=None, arena_url='https://arena.drwhy.ai/', open_browser=True): """Generate all plots and uploads them to GitHub Gist Function generates only not cached plots. If token is not provided then function uses OAuth to open GitHub authorization page. Parameters ----------- token : str or None GitHub personal access token. If token is None, then OAuth is used. arena_url : str Address of Arena dashboard instance open_browser : bool Whether to open Arena after upload. Notes -------- Read more about data sources https://arena.drwhy.ai/docs/guide/basic-concepts Returns -------- Link to the Arena """ global_check_import('requests') if token is None: global_check_import('flask') global_check_import('flask_cors') token = generate_token() data_url = upload_arena(self, token) url = arena_url + '?data=' + data_url if open_browser: webbrowser.open(url) return urlMethods

def find_param_value(self, param_type, param_label)-

Searches for Param object with specified label

Parameters

param_type:str- One of ['model', 'variable', 'observation', 'dataset'].

param_label:str- Label of searched param

Notes

Information about params https://arena.drwhy.ai/docs/guide/params

Returns

ParamorNone

Expand source code Browse git

def find_param_value(self, param_type, param_label): """Searches for Param object with specified label Parameters ----------- param_type : str One of ['model', 'variable', 'observation', 'dataset']. param_label : str Label of searched param Notes -------- Information about params https://arena.drwhy.ai/docs/guide/params Returns -------- Param or None """ if param_label is None or not isinstance(param_label, str): return None return next((x for x in self.get_params(param_type) if x.get_label() == param_label), None) def get_available_params(self)-

Returns dict containing available params of all types

This method collect result of

get_paramsmethod for each param type into a dict. Keys are param types and values are lists of Param objects.Notes

Information about params https://arena.drwhy.ai/docs/guide/params

Returns

dict

Expand source code Browse git

def get_available_params(self): """Returns dict containing available params of all types This method collect result of `get_params` method for each param type into a dict. Keys are param types and values are lists of Param objects. Notes -------- Information about params https://arena.drwhy.ai/docs/guide/params Returns -------- dict """ result = {} for param_type in ['model', 'observation', 'variable', 'dataset']: result[param_type] = self.get_params(param_type) return result def get_option(self, options_category, option)-

Returns value of specified option

Parameters

options_category:str- Category of option. In most cases category is coresponds to one plot_type.

- Categories are underlined in the output of arena.print_options()

option:str- Name of the option

Notes

List of plots with described options for each one https://arena.drwhy.ai/docs/guide/observation-level

Returns

Noneorvalueofoption

Expand source code Browse git

def get_option(self, options_category, option): """Returns value of specified option Parameters ----------- options_category : str Category of option. In most cases category is coresponds to one plot_type. Categories are underlined in the output of arena.print_options() option : str Name of the option Notes -------- List of plots with described options for each one https://arena.drwhy.ai/docs/guide/observation-level Returns -------- None or value of option """ options = self.options.get(options_category) if options is None: raise Exception('Invalid options category') if option not in options.keys(): return with self.mutex: return self.options.get(options_category).get(option).get('value') def get_param_attributes(self, param_type, param_label)-

Returns attributes for one param

Function searches for param with specified type and label and returns it's attributes.

Parameters

param_type:str- One of ['model', 'variable', 'observation', 'dataset'].

param_label:str- Label of param

Notes

Attribused are used for dynamicly modifying observations https://arena.drwhy.ai/docs/guide/modifying-observations

Returns

dict

Expand source code Browse git

def get_param_attributes(self, param_type, param_label): """Returns attributes for one param Function searches for param with specified type and label and returns it's attributes. Parameters ----------- param_type : str One of ['model', 'variable', 'observation', 'dataset']. param_label : str Label of param Notes -------- Attribused are used for dynamicly modifying observations https://arena.drwhy.ai/docs/guide/modifying-observations Returns -------- dict """ if not self.enable_attributes: return {} param_value = self.find_param_value(param_type=param_type, param_label=param_label) if param_value: return param_value.get_attributes() else: return {} def get_params(self, param_type)-

Returns list of available params

Parameters

param_type:str- One of ['model', 'variable', 'observation', 'dataset']. Params of this type will be returned

Notes

Information about params https://arena.drwhy.ai/docs/guide/params

Returns

ListofParam objects

Expand source code Browse git

def get_params(self, param_type): """Returns list of available params Parameters ----------- param_type : str One of ['model', 'variable', 'observation', 'dataset']. Params of this type will be returned Notes -------- Information about params https://arena.drwhy.ai/docs/guide/params Returns -------- List of Param objects """ if param_type == 'observation': with self.mutex: return self.observations elif param_type == 'variable': with self.mutex: if not self.variables_cache: # Extract column names from every dataset in self.dataset list and flatten it result_datasets = [col for dataset in self.datasets for col in dataset.variables] # Extract column names from every model in self.models list and flatten it result_explainers = [col for model in self.models for col in model.variables] result_str = np.unique(result_datasets + result_explainers).tolist() self.variables_cache = [VariableParam(x) for x in result_str] if self.enable_attributes: for var in self.variables_cache: try: for dataset in self.datasets: if var.variable in dataset.variables: var.update_attributes(dataset.dataset[var.variable]) for model in self.models: if var.variable in model.variables: var.update_attributes(model.explainer.data[var.variable]) except: var.clear_attributes() return self.variables_cache elif param_type == 'model': with self.mutex: return self.models elif param_type == 'dataset': with self.mutex: return self.datasets else: raise Exception('Invalid param type') def get_params_attributes(self, param_type=None)-

Returns attributes for all params

When

param_typeis not None, then function returns list of dicts. Each dict represents one of available attribute for specified param type. Fieldnameis attribute name and fieldvaluesis mapped list of available params to list of value of attribute. Whenparam_typeis None, then function returns dict with keys for each param type and values are lists described above.Parameters

param_type:strorNone- One of ['model', 'variable', 'observation', 'dataset'] or None. Specifies attributes of which params should be returned.

Notes

Attribused are used for dynamicly modifying observations https://arena.drwhy.ai/docs/guide/modifying-observations

Returns

dictorlist

Expand source code Browse git

def get_params_attributes(self, param_type=None): """Returns attributes for all params When `param_type` is not None, then function returns list of dicts. Each dict represents one of available attribute for specified param type. Field `name` is attribute name and field `values` is mapped list of available params to list of value of attribute. When `param_type` is None, then function returns dict with keys for each param type and values are lists described above. Parameters ----------- param_type : str or None One of ['model', 'variable', 'observation', 'dataset'] or None. Specifies attributes of which params should be returned. Notes -------- Attribused are used for dynamicly modifying observations https://arena.drwhy.ai/docs/guide/modifying-observations Returns -------- dict or list """ if param_type is None: obj = {} for p in ['model', 'observation', 'variable', 'dataset']: obj[p] = self.get_params_attributes(p) return obj if not self.enable_attributes: return [] attrs = Param.get_param_class(param_type).list_attributes(self) array = [] for attr in attrs: array.append({ 'name': attr, 'values': [param.get_attributes().get(attr) for param in self.get_params(param_type)] }) return array def get_supported_plots(self)-

Returns plots classes that can produce at least one valid chart for this Arena.

Returns

Listofclasses extending PlotContainer

Expand source code Browse git

def get_supported_plots(self): """Returns plots classes that can produce at least one valid chart for this Arena. Returns ----------- List of classes extending PlotContainer """ return self.plots_manager.get_supported_plots() def list_available_params(self)-

Returns dict containing labels of available params of all types

This methods collect result of

list_paramsfor each param type into a dict. Keys are param types and values are list of labels.Notes

Information about params https://arena.drwhy.ai/docs/guide/params

Returns

dict

Expand source code Browse git

def list_available_params(self): """Returns dict containing labels of available params of all types This methods collect result of `list_params` for each param type into a dict. Keys are param types and values are list of labels. Notes -------- Information about params https://arena.drwhy.ai/docs/guide/params Returns -------- dict """ result = {} for param_type in ['model', 'observation', 'variable', 'dataset']: result[param_type] = self.list_params(param_type) return result def list_params(self, param_type)-

Returns list of available params's labels

Parameters

param_type:str- One of ['model', 'variable', 'observation', 'dataset']. Labels of params of this type will be returned

Notes

Information about params https://arena.drwhy.ai/docs/guide/params

Returns

Listofstr

Expand source code Browse git

def list_params(self, param_type): """Returns list of available params's labels Parameters ----------- param_type : str One of ['model', 'variable', 'observation', 'dataset']. Labels of params of this type will be returned Notes -------- Information about params https://arena.drwhy.ai/docs/guide/params Returns -------- List of str """ return [x.get_label() for x in self.get_params(param_type)] def print_options(self, options_category=None)-

Prints available options for plots

Parameters

options_category:strorNone- When not None, then only options for plots or resources with this category will be printed.

Notes

List of plots with described options for each one https://arena.drwhy.ai/docs/guide/observation-level

Expand source code Browse git

def print_options(self, options_category=None): """Prints available options for plots Parameters ----------- options_category : str or None When not None, then only options for plots or resources with this category will be printed. Notes -------- List of plots with described options for each one https://arena.drwhy.ai/docs/guide/observation-level """ options = self.options.get(options_category) if options is None: for category in self.options.keys(): self.print_options(category) return if len(options.keys()) == 0: return print('\n\033[1m' + options_category + '\033[0m') print('---------------------------------') for option_name in options.keys(): value = options.get(option_name).get('value') print(option_name + ': ' + str(value) + ' #' + options.get(option_name).get('desc')) def push_dataset(self, dataset, target, label, precalculate=None)-

Adds dataset to Arena

Pushed dataset will visualised using exploratory data analysis plots. Function creates DatasetParam object with specified label and target name. When precalculation is enabled triggers filling cache.

Parameters

dataset:pandas.DataFrame- Data frame to be visualised using EDA plots. This dataset should contain target variable.

target:str- Name of target column

label:str- Label for this dataset

precalculate:boolorNone- Overrides constructor

precalculateparameter when it is not None. If true, then only plots using this model will be precalculated.

Expand source code Browse git

def push_dataset(self, dataset, target, label, precalculate=None): """Adds dataset to Arena Pushed dataset will visualised using exploratory data analysis plots. Function creates DatasetParam object with specified label and target name. When precalculation is enabled triggers filling cache. Parameters ----------- dataset : pandas.DataFrame Data frame to be visualised using EDA plots. This dataset should contain target variable. target : str Name of target column label : str Label for this dataset precalculate : bool or None Overrides constructor `precalculate` parameter when it is not None. If true, then only plots using this model will be precalculated. """ if not isinstance(dataset, DataFrame): raise Exception('Dataset argument is not a pandas DataFrame') if len(dataset.columns.names) != 1: raise Exception('Dataset argument need to have only one level column names') precalculate = self.precalculate if precalculate is None else bool(precalculate) target = str(target) if target not in dataset.columns: raise Exception('Target is not a column from dataset') if (not isinstance(label, str)) or (len(label) == 0): raise Exception('Label need to be at least one letter') if label in self.list_params('dataset'): raise Exception('Labels need to be unique') param = DatasetParam(dataset=dataset, label=label, target=target) with self.mutex: self.update_timestamp() self.datasets.append(param) self.variables_cache = [] if precalculate: self.plots_manager.fill_cache({'dataset': param}) def push_model(self, explainer, precalculate=None)-

Adds model to Arena

This method encapsulate explainer in ModelParam object and save appends models fields. When precalculation is enabled triggers filling cache.

Parameters

explainer:Explainer- Explainer created using dalex package

precalculate:boolorNone- Overrides constructor

precalculateparameter when it is not None. If true, then only plots using this model will be precalculated.

Expand source code Browse git

def push_model(self, explainer, precalculate=None): """Adds model to Arena This method encapsulate explainer in ModelParam object and save appends models fields. When precalculation is enabled triggers filling cache. Parameters ----------- explainer : dalex.Explainer Explainer created using dalex package precalculate : bool or None Overrides constructor `precalculate` parameter when it is not None. If true, then only plots using this model will be precalculated. """ if not isinstance(explainer, Explainer): raise Exception('Invalid Explainer argument') if explainer.label in self.list_params('model'): raise Exception('Explainer with the same label was already added') precalculate = self.precalculate if precalculate is None else bool(precalculate) param = ModelParam(explainer) with self.mutex: self.update_timestamp() self.models.append(param) self.variables_cache = [] if precalculate: self.plots_manager.fill_cache({'model': param}) def push_observations(self, observations, precalculate=None)-

Adds observations to Arena

Pushed observations will be used to local explainations. Function creates ObservationParam object for each row of pushed dataset. Label for each observation is taken from row name. When precalculation is enabled triggers filling cache.

Parameters

observations:pandas.DataFrame- Data frame of observations to be explained using instance level plots. Label for each observation is taken from row name.

precalculate:boolorNone- Overrides constructor

precalculateparameter when it is not None. If true, then only plots using thease observations will be precalculated.

Expand source code Browse git

def push_observations(self, observations, precalculate=None): """Adds observations to Arena Pushed observations will be used to local explainations. Function creates ObservationParam object for each row of pushed dataset. Label for each observation is taken from row name. When precalculation is enabled triggers filling cache. Parameters ----------- observations : pandas.DataFrame Data frame of observations to be explained using instance level plots. Label for each observation is taken from row name. precalculate : bool or None Overrides constructor `precalculate` parameter when it is not None. If true, then only plots using thease observations will be precalculated. """ if not isinstance(observations, DataFrame): raise Exception('Observations argument is not a pandas DataFrame') if len(observations.index.names) != 1: raise Exception('Observations argument need to have only one index') if not observations.index.is_unique: raise Exception('Observations argument need to have unique indexes') precalculate = self.precalculate if precalculate is None else bool(precalculate) old_observations = self.list_params('observation') observations = observations.set_index(observations.index.astype(str)) params_objects = [] for x in observations.index: if x in old_observations: raise Exception('Indexes of observations need to be unique across all observations') params_objects.append(ObservationParam(dataset=observations, index=x)) with self.mutex: self.update_timestamp() self.observations.extend(params_objects) if precalculate: for obs in params_objects: self.plots_manager.fill_cache({'observation': obs}) def run_server(self, host='127.0.0.1', port=8181, append_data=False, arena_url='https://arena.drwhy.ai/', disable_logs=True)-

Starts server for live mode of Arena

Parameters

host:str- ip or hostname for the server

port:int- port number for the server

append_data:bool- if generated link should append data to already existing Arena window.

arena_url:str- URl of Arena dhasboard

disable_logs:str- if logs should be muted

Notes

Read more about data sources https://arena.drwhy.ai/docs/guide/basic-concepts

Returns

Link to Arena

Expand source code Browse git

def run_server(self, host='127.0.0.1', port=8181, append_data=False, arena_url='https://arena.drwhy.ai/', disable_logs=True): """Starts server for live mode of Arena Parameters ----------- host : str ip or hostname for the server port : int port number for the server append_data : bool if generated link should append data to already existing Arena window. arena_url : str URl of Arena dhasboard disable_logs : str if logs should be muted Notes -------- Read more about data sources https://arena.drwhy.ai/docs/guide/basic-concepts Returns ----------- Link to Arena """ if self.server_thread: raise Exception('Server is already running. To stop ip use arena.stop_server().') global_check_import('flask') global_check_import('flask_cors') global_check_import('requests') self.server_thread = threading.Thread(target=start_server, args=(self, host, port, disable_logs)) self.server_thread.start() if append_data: print(arena_url + '?append=http://' + host + ':' + str(port) + '/') else: print(arena_url + '?data=http://' + host + ':' + str(port) + '/') def save(self, filename='datasource.json')-

Generate all plots and saves them to JSON file

Function generates only not cached plots.

Parameters

filename:str- Path or filename to output file

Notes

Read more about data sources https://arena.drwhy.ai/docs/guide/basic-concepts

Returns

None

Expand source code Browse git

def save(self, filename="datasource.json"): """Generate all plots and saves them to JSON file Function generates only not cached plots. Parameters ----------- filename : str Path or filename to output file Notes -------- Read more about data sources https://arena.drwhy.ai/docs/guide/basic-concepts Returns -------- None """ with open(filename, 'w') as file: file.write(get_json(self)) def set_option(self, options_category, option, value)-

Sets value for the plot option

Parameters

options_category:strorNone- When None, then value will be set for each plot and resource

having option with name equal to

optionargument. Otherwise only for plots and resources with specified options_category. In most cases category is coresponds to one plot_type. Categories are underlined in the output of arena.print_options() option:str- Name of the option

value:*- Value to be set

Notes

List of plots with described options for each one https://arena.drwhy.ai/docs/guide/observation-level

Expand source code Browse git

def set_option(self, options_category, option, value): """Sets value for the plot option Parameters ----------- options_category : str or None When None, then value will be set for each plot and resource having option with name equal to `option` argument. Otherwise only for plots and resources with specified options_category. In most cases category is coresponds to one plot_type. Categories are underlined in the output of arena.print_options() option : str Name of the option value : * Value to be set Notes -------- List of plots with described options for each one https://arena.drwhy.ai/docs/guide/observation-level """ if options_category is None: for category in self.options.keys(): self.set_option(category, option, value) return options = self.options.get(options_category) if options is None: raise Exception('Invalid options category') if option not in options.keys(): return with self.mutex: self.options[options_category][option]['value'] = value for plot_type in np.unique([x.info.get('plotType') for x in self.plots_manager.plots if x.options_category == options_category]): self.plots_manager.clear_cache(plot_type) for resource_type in np.unique([x.resource_type for x in self.resource_manager.resources if x.options_category == options_category]): self.resource_manager.clear_cache(resource_type) if self.precalculate: self.plots_manager.fill_cache() def stop_server(self)-

Stops running server

Expand source code Browse git

def stop_server(self): """Stops running server""" if not self.server_thread: raise Exception('Server is not running') self._stop_server() self.server_thread.join() self.server_thread = None def update_timestamp(self)-

Updates timestamp

Notes

This function must be called from mutex context

Expand source code Browse git

def update_timestamp(self): """Updates timestamp Notes ------- This function must be called from mutex context """ now = datetime.now() self.timestamp = datetime.timestamp(now) def upload(self, token=None, arena_url='https://arena.drwhy.ai/', open_browser=True)-

Generate all plots and uploads them to GitHub Gist

Function generates only not cached plots. If token is not provided then function uses OAuth to open GitHub authorization page.

Parameters

token:strorNone- GitHub personal access token. If token is None, then OAuth is used.

arena_url:str- Address of Arena dashboard instance

open_browser:bool- Whether to open Arena after upload.

Notes

Read more about data sources https://arena.drwhy.ai/docs/guide/basic-concepts

Returns

Link to the Arena

Expand source code Browse git

def upload(self, token=None, arena_url='https://arena.drwhy.ai/', open_browser=True): """Generate all plots and uploads them to GitHub Gist Function generates only not cached plots. If token is not provided then function uses OAuth to open GitHub authorization page. Parameters ----------- token : str or None GitHub personal access token. If token is None, then OAuth is used. arena_url : str Address of Arena dashboard instance open_browser : bool Whether to open Arena after upload. Notes -------- Read more about data sources https://arena.drwhy.ai/docs/guide/basic-concepts Returns -------- Link to the Arena """ global_check_import('requests') if token is None: global_check_import('flask') global_check_import('flask_cors') token = generate_token() data_url = upload_arena(self, token) url = arena_url + '?data=' + data_url if open_browser: webbrowser.open(url) return url

class Aspect (explainer, depend_method='assoc', clust_method='complete', corr_method='spearman', agg_method='max')-

Create Aspect

Explanation methods that do not take into account dependencies between variables can produce misleading results. This class creates a representation of a model based on an Explainer object. In addition, it calculates the relationships between the variables that can be used to create explanations. Methods of this class produce explanation objects, that contain the main result attribute, and can be visualised using the plot method.

The

explaineris the only required parameter.Parameters

explainer:Explainer object- Model wrapper created using the Explainer class.

depend_method:{'assoc', 'pps'}orfunction, optional- The method of calculating the dependencies between variables (i.e. the dependency

matrix). Default is

'assoc', which means the use of statistical association (correlation coefficient, Cramér's V based on Pearson's chi-squared statistic and eta-quared based on Kruskal-Wallis H-statistic);'pps'stands for Power Predictive Score. NOTE: When a function is passed, it is called with theexplainer.dataand it must return a symmetric dependency matrix (pd.DataFramewith variable names as columns and rows). clust_method:{'complete', 'single', 'average', 'weighted', 'centroid', 'median', 'ward'}, optional- The linkage algorithm to use for variables hierarchical clustering

(default is

'complete'). corr_method:{'spearman', 'pearson', 'kendall'}, optional- The method of calculating correlation between numerical variables

(default is

'spearman'). NOTE: Ignored ifdepend_methodis not'assoc'. agg_method:{'max', 'min', 'avg'}, optional- The method of aggregating the PPS values for pairs of variables

(default is

'max'). NOTE: Ignored ifdepend_methodis not'pps'.

Attributes

explainer:Explainer object- Model wrapper created using the Explainer class.

depend_method:{'assoc', 'pps'}orfunction- The method of calculating the dependencies between variables.

clust_method:{'complete', 'single', 'average', 'weighted', 'centroid', 'median', 'ward'}- The linkage algorithm to use for variables hierarchical clustering.

corr_method:{'spearman', 'pearson', 'kendall'}- The method of calculating correlation between numerical variables.

agg_method:{'max', 'min', 'avg'}- The method of aggregating the PPS values for pairs of variables.

depend_matrix:pd.DataFrame- The dependency matrix (with variable names as columns and rows).

linkage_matrix : The hierarchical clustering of variables encoded as a

scipylinkage matrix.Notes

- assoc, eta-squared: http://tss.awf.poznan.pl/files/3_Trends_Vol21_2014__no1_20.pdf

- assoc, Cramér's V: http://stats.lse.ac.uk/bergsma/pdf/cramerV3.pdf

- PPS: https://github.com/8080labs/ppscore

- triplot: https://arxiv.org/abs/2104.03403

Expand source code Browse git

class Aspect: """Create Aspect Explanation methods that do not take into account dependencies between variables can produce misleading results. This class creates a representation of a model based on an Explainer object. In addition, it calculates the relationships between the variables that can be used to create explanations. Methods of this class produce explanation objects, that contain the main result attribute, and can be visualised using the plot method. The `explainer` is the only required parameter. Parameters ---------- explainer : Explainer object Model wrapper created using the Explainer class. depend_method: {'assoc', 'pps'} or function, optional The method of calculating the dependencies between variables (i.e. the dependency matrix). Default is `'assoc'`, which means the use of statistical association (correlation coefficient, Cramér's V based on Pearson's chi-squared statistic and eta-quared based on Kruskal-Wallis H-statistic); `'pps'` stands for Power Predictive Score. NOTE: When a function is passed, it is called with the `explainer.data` and it must return a symmetric dependency matrix (`pd.DataFrame` with variable names as columns and rows). clust_method : {'complete', 'single', 'average', 'weighted', 'centroid', 'median', 'ward'}, optional The linkage algorithm to use for variables hierarchical clustering (default is `'complete'`). corr_method : {'spearman', 'pearson', 'kendall'}, optional The method of calculating correlation between numerical variables (default is `'spearman'`). NOTE: Ignored if `depend_method` is not `'assoc'`. agg_method : {'max', 'min', 'avg'}, optional The method of aggregating the PPS values for pairs of variables (default is `'max'`). NOTE: Ignored if `depend_method` is not `'pps'`. Attributes -------- explainer : Explainer object Model wrapper created using the Explainer class. depend_method : {'assoc', 'pps'} or function The method of calculating the dependencies between variables. clust_method : {'complete', 'single', 'average', 'weighted', 'centroid', 'median', 'ward'} The linkage algorithm to use for variables hierarchical clustering. corr_method : {'spearman', 'pearson', 'kendall'} The method of calculating correlation between numerical variables. agg_method : {'max', 'min', 'avg'} The method of aggregating the PPS values for pairs of variables. depend_matrix : pd.DataFrame The dependency matrix (with variable names as columns and rows). linkage_matrix : The hierarchical clustering of variables encoded as a `scipy` linkage matrix. Notes ----- - assoc, eta-squared: http://tss.awf.poznan.pl/files/3_Trends_Vol21_2014__no1_20.pdf - assoc, Cramér's V: http://stats.lse.ac.uk/bergsma/pdf/cramerV3.pdf - PPS: https://github.com/8080labs/ppscore - triplot: https://arxiv.org/abs/2104.03403 """ def __init__( self, explainer, depend_method="assoc", clust_method="complete", corr_method="spearman", agg_method="max", ): _depend_method, _corr_method, _agg_method = checks.check_method_depend(depend_method, corr_method, agg_method) self.explainer = explainer self.depend_method = _depend_method self.clust_method = clust_method self.corr_method = _corr_method self.agg_method = _agg_method self.depend_matrix = utils.calculate_depend_matrix( self.explainer.data, self.depend_method, self.corr_method, self.agg_method ) self.linkage_matrix = utils.calculate_linkage_matrix( self.depend_matrix, clust_method ) self._hierarchical_clustering_dendrogram = plot.plot_dendrogram( self.linkage_matrix, self.depend_matrix.columns ) self._dendrogram_aspects_ordered = utils.get_dendrogram_aspects_ordered( self._hierarchical_clustering_dendrogram, self.depend_matrix ) self._full_hierarchical_aspect_importance = None self._mt_params = None def get_aspects(self, h=0.5, n=None): from scipy.cluster.hierarchy import fcluster """Form aspects of variables from the hierarchical clustering Parameters ---------- h : float, optional Threshold to apply when forming aspects, i.e., the minimum value of the dependency between the variables grouped in one aspect (default is `0.5`). NOTE: Ignored if `n` is not `None`. n : int, optional Maximum number of aspects to form (default is `None`, which means the use of `h` parameter). Returns ------- dict of lists Variables grouped in aspects, e.g. `{'aspect_1': ['x1', 'x2'], 'aspect_2': ['y1', 'y2']}`. """ if n is None: aspect_label = fcluster(self.linkage_matrix, 1 - h, criterion="distance") else: aspect_label = fcluster(self.linkage_matrix, n, criterion="maxclust") aspects = pd.DataFrame( {"feature": self.depend_matrix.columns, "aspect": aspect_label} ) aspects = aspects.groupby("aspect")["feature"].apply(list).reset_index() aspects_dict = {} # rename an aspect when there is a single variable in it i = 1 for index, row in aspects.iterrows(): if len(row["feature"]) > 1: aspects_dict[f"aspect_{i}"] = row["feature"] i += 1 else: aspects_dict[row["feature"][0]] = row["feature"] return aspects_dict def plot_dendrogram( self, title="Hierarchical clustering dendrogram", lines_interspace=20, rounding_function=np.round, digits=3, show=True, ): """Plot the hierarchical clustering dendrogram of variables Parameters ---------- title : str, optional Title of the plot (default is "Hierarchical clustering dendrogram"). lines_interspace : float, optional Interspace between lines of dendrogram in px (default is `20`). rounding_function : function, optional A function that will be used for rounding numbers (default is `np.around`). digits : int, optional Number of decimal places (`np.around`) to round contributions. See `rounding_function` parameter (default is `3`). show : bool, optional `True` shows the plot; `False` returns the plotly Figure object that can be edited or saved using the `write_image()` method (default is `True`). Returns ------- None or plotly.graph_objects.Figure Return figure that can be edited or saved. See `show` parameter. """ m = len(self.depend_matrix.columns) plot_height = 78 + 71 + m * lines_interspace + (m + 1) * lines_interspace / 4 fig = self._hierarchical_clustering_dendrogram fig = plot.add_text_and_tooltips_to_dendrogram( fig, self._dendrogram_aspects_ordered, rounding_function, digits ) fig = plot._add_points_on_dendrogram_traces(fig) fig.update_layout( title={"text": title, "x": 0.15}, yaxis={"automargin": True, "autorange": "reversed"}, height=plot_height, ) if show: fig.show(config=_theme.get_default_config()) else: return fig def predict_parts( self, new_observation, variable_groups=None, type="default", h=0.5, N=2000, B=25, n_aspects=None, sample_method="default", f=2, label=None, processes=1, random_state=None, ): """Calculate predict-level aspect importance Parameters ---------- new_observation : pd.Series or np.ndarray (1d) or pd.DataFrame (1,p) An observation for which a prediction needs to be explained. variable_groups : dict of lists or None Variables grouped in aspects to calculate their importance (default is `None`). type : {'default', 'shap'}, optional Type of aspect importance/attributions (default is `'default'`, which means the use of simplified LIME method). h : float, optional Threshold to apply when forming aspects, i.e., the minimum value of the dependency between the variables grouped in one aspect (default is `0.5`). N : int, optional Number of observations that will be sampled from the `explainer.data` attribute before the calculation of aspect importance (default is `2000`). B : int, optional Parameter specific for `type == 'shap'`. Number of random paths to calculate aspect attributions (default is `25`). NOTE: Ignored if `type` is not `'shap'`. n_aspects : int, optional Parameter specific for `type == 'default'`. Maximum number of non-zero importances, i.e. coefficients after lasso fitting (default is `None`, which means the linear regression is used). NOTE: Ignored if `type` is not `'default'`. sample_method : {'default', 'binom'}, optional Parameter specific for `type == 'default'`. Sampling method for creating binary matrix used as mask for replacing aspects in sampled data (default is `'default'`, which means it randomly replaces one or two zeros per row; `'binom'` replaces random number of zeros per row). NOTE: Ignored if `type` is not `'default'`. f : int, optional Parameter specific for `type == 'default'` and `sample_method == 'binom'`. Parameter controlling average number of replaced zeros for binomial sampling (default is `2`). NOTE: Ignored if `type` is not `'default'` or `sample_method` is not `'binom'`. label : str, optional Name to appear in result and plots. Overrides default. processes : int, optional Parameter specific for `type == 'shap'`. Number of parallel processes to use in calculations. Iterated over `B` (default is `1`, which means no parallel computation). random_state : int, optional Set seed for random number generator (default is random seed). Returns ------- PredictAspectImportance class object Explanation object containing the main result attribute and the plot method. """ if variable_groups is None: variable_groups = self.get_aspects(h) pai = PredictAspectImportance( variable_groups, type, N, B, n_aspects, sample_method, f, self.depend_method, self.corr_method, self.agg_method, processes, random_state, _depend_matrix=self.depend_matrix ) pai.fit(self.explainer, new_observation) if label is not None: pai.result["label"] = label return pai def model_parts( self, variable_groups=None, h=0.5, loss_function=None, type="variable_importance", N=1000, B=10, processes=1, label=None, random_state=None, ): """Calculate model-level aspect importance Parameters ---------- variable_groups : dict of lists or None Variables grouped in aspects to calculate their importance (default is `None`). h : float, optional Threshold to apply when forming aspects, i.e., the minimum value of the dependency between the variables grouped in one aspect (default is `0.5`). loss_function : {'rmse', '1-auc', 'mse', 'mae', 'mad'} or function, optional If string, then such loss function will be used to assess aspect importance (default is `'rmse'` or `'1-auc'`, depends on `explainer.model_type` attribute). type : {'variable_importance', 'ratio', 'difference'}, optional Type of transformation that will be applied to dropout loss (default is `'variable_importance'`, which is Permutational Variable Importance). N : int, optional Number of observations that will be sampled from the `explainer.data` attribute before the calculation of aspect importance. `None` means all `data` (default is `1000`). B : int, optional Number of permutation rounds to perform on each variable (default is `10`). processes : int, optional Number of parallel processes to use in calculations. Iterated over `B` (default is `1`, which means no parallel computation). label : str, optional Name to appear in result and plots. Overrides default. random_state : int, optional Set seed for random number generator (default is random seed). Returns ------- ModelAspectImportance class object Explanation object containing the main result attribute and the plot method. """ loss_function = checks.check_method_loss_function(self.explainer, loss_function) mai_result = None if variable_groups is None: variable_groups = self.get_aspects(h) # get results from triplot if it was precalculated with the same params if self._full_hierarchical_aspect_importance is not None: if ( self._mt_params["loss_function"] == loss_function and self._mt_params["N"] == N and self._mt_params["B"] == B and self._mt_params["type"] == type ): h = min(1, h) h_selected = np.unique( self._full_hierarchical_aspect_importance.loc[ self._full_hierarchical_aspect_importance.h >= h ].h )[0] mai_result = self._full_hierarchical_aspect_importance.loc[ self._full_hierarchical_aspect_importance.h == h_selected ] ai = ModelAspectImportance( loss_function=loss_function, type=type, N=N, B=B, variable_groups=variable_groups, processes=processes, random_state=random_state, _depend_matrix=self.depend_matrix ) # calculate if there was no results if mai_result is None: ai.fit(self.explainer) else: mai_result = mai_result[ [ "aspect_name", "variable_names", "dropout_loss", "dropout_loss_change", "min_depend", "vars_min_depend", "label", ] ] ai.result = mai_result if label is not None: ai.result["label"] = label return ai def predict_triplot( self, new_observation, type="default", N=2000, B=25, sample_method="default", f=2, processes=1, random_state=None, ): """Calculate predict-level hierarchical aspect importance Parameters ---------- new_observation : pd.Series or np.ndarray (1d) or pd.DataFrame (1,p) An observation for which a prediction needs to be explained. type : {'default', 'shap'}, optional Type of aspect importance/attributions (default is `'default'`, which means the use of simplified LIME method). N : int, optional Number of observations that will be sampled from the `explainer.data` attribute before the calculation of aspect importance (default is `2000`). B : int, optional Parameter specific for `type == 'shap'`. Number of random paths to calculate aspect attributions (default is `25`). NOTE: Ignored if `type` is not `'shap'`. sample_method : {'default', 'binom'}, optional Parameter specific for `type == 'default'`. Sampling method for creating binary matrix used as mask for replacing aspects in data (default is `'default'`, which means it randomly replaces one or two zeros per row; `'binom'` replaces random number of zeros per row). NOTE: Ignored if `type` is not `'default'`. f : int, optional Parameter specific for `type == 'default'` and `sample_method == 'binom'`. Parameter controlling average number of replaced zeros for binomial sampling (default is `2`). NOTE: Ignored if `type` is not `'default'` or `sample_method` is not `'binom'`. processes : int, optional Number of parallel processes to use in calculations. Iterated over `B` (default is `1`, which means no parallel computation). random_state : int, optional Set seed for random number generator (default is random seed). Returns ------- PredictTriplot class object Explanation object containing the main result attribute and the plot method. """ pt = PredictTriplot(type, N, B, sample_method, f, processes, random_state) pt.fit(self, new_observation) return pt def model_triplot( self, loss_function=None, type="variable_importance", N=1000, B=10, processes=1, random_state=None, ): """Calculate model-level hierarchical aspect importance Parameters ---------- loss_function : {'rmse', '1-auc', 'mse', 'mae', 'mad'} or function, optional If string, then such loss function will be used to assess aspect importance (default is `'rmse'` or `'1-auc'`, depends on `explainer.model_type` attribute). type : {'variable_importance', 'ratio', 'difference'}, optional Type of transformation that will be applied to dropout loss (default is `'variable_importance'`, which is Permutational Variable Importance). N : int, optional Number of observations that will be sampled from the `explainer.data` attribute before the calculation of aspect importance. `None` means all `data` (default is `1000`). B : int, optional Number of permutation rounds to perform on each variable (default is `10`). processes : int, optional Number of parallel processes to use in calculations. Iterated over `B` (default is `1`, which means no parallel computation). random_state : int, optional Set seed for random number generator (default is random seed). Returns ------- ModelTriplot class object Explanation object containing the main result attribute and the plot method. """ loss_function = checks.check_method_loss_function(self.explainer, loss_function) # get proper loss_function for model_type mt = ModelTriplot(loss_function, type, N, B, processes, random_state) self._mt_params = {"loss_function": loss_function, "type": type, "N": N, "B": B} # save params for future calls of model_parts mt.fit(self) return mtMethods

def get_aspects(self, h=0.5, n=None)-

Expand source code Browse git

def get_aspects(self, h=0.5, n=None): from scipy.cluster.hierarchy import fcluster """Form aspects of variables from the hierarchical clustering Parameters ---------- h : float, optional Threshold to apply when forming aspects, i.e., the minimum value of the dependency between the variables grouped in one aspect (default is `0.5`). NOTE: Ignored if `n` is not `None`. n : int, optional Maximum number of aspects to form (default is `None`, which means the use of `h` parameter). Returns ------- dict of lists Variables grouped in aspects, e.g. `{'aspect_1': ['x1', 'x2'], 'aspect_2': ['y1', 'y2']}`. """ if n is None: aspect_label = fcluster(self.linkage_matrix, 1 - h, criterion="distance") else: aspect_label = fcluster(self.linkage_matrix, n, criterion="maxclust") aspects = pd.DataFrame( {"feature": self.depend_matrix.columns, "aspect": aspect_label} ) aspects = aspects.groupby("aspect")["feature"].apply(list).reset_index() aspects_dict = {} # rename an aspect when there is a single variable in it i = 1 for index, row in aspects.iterrows(): if len(row["feature"]) > 1: aspects_dict[f"aspect_{i}"] = row["feature"] i += 1 else: aspects_dict[row["feature"][0]] = row["feature"] return aspects_dict def model_parts(self, variable_groups=None, h=0.5, loss_function=None, type='variable_importance', N=1000, B=10, processes=1, label=None, random_state=None)-

Calculate model-level aspect importance

Parameters

variable_groups:dictoflistsorNone- Variables grouped in aspects to calculate their importance (default is

None). h:float, optional- Threshold to apply when forming aspects, i.e., the minimum value of the dependency

between the variables grouped in one aspect (default is

0.5). loss_function:{'rmse', '1-auc', 'mse', 'mae', 'mad'}orfunction, optional- If string, then such loss function will be used to assess aspect importance

(default is

'rmse'or'1-auc', depends onexplainer.model_typeattribute). type:{'variable_importance', 'ratio', 'difference'}, optional- Type of transformation that will be applied to dropout loss

(default is

'variable_importance', which is Permutational Variable Importance). N:int, optional- Number of observations that will be sampled from the

explainer.dataattribute before the calculation of aspect importance.Nonemeans alldata(default is1000). B:int, optional- Number of permutation rounds to perform on each variable (default is

10). processes:int, optional- Number of parallel processes to use in calculations. Iterated over

B(default is1, which means no parallel computation). label:str, optional- Name to appear in result and plots. Overrides default.

random_state:int, optional- Set seed for random number generator (default is random seed).

Returns

ModelAspectImportance class object- Explanation object containing the main result attribute and the plot method.

Expand source code Browse git

def model_parts( self, variable_groups=None, h=0.5, loss_function=None, type="variable_importance", N=1000, B=10, processes=1, label=None, random_state=None, ): """Calculate model-level aspect importance Parameters ---------- variable_groups : dict of lists or None Variables grouped in aspects to calculate their importance (default is `None`). h : float, optional Threshold to apply when forming aspects, i.e., the minimum value of the dependency between the variables grouped in one aspect (default is `0.5`). loss_function : {'rmse', '1-auc', 'mse', 'mae', 'mad'} or function, optional If string, then such loss function will be used to assess aspect importance (default is `'rmse'` or `'1-auc'`, depends on `explainer.model_type` attribute). type : {'variable_importance', 'ratio', 'difference'}, optional Type of transformation that will be applied to dropout loss (default is `'variable_importance'`, which is Permutational Variable Importance). N : int, optional Number of observations that will be sampled from the `explainer.data` attribute before the calculation of aspect importance. `None` means all `data` (default is `1000`). B : int, optional Number of permutation rounds to perform on each variable (default is `10`). processes : int, optional Number of parallel processes to use in calculations. Iterated over `B` (default is `1`, which means no parallel computation). label : str, optional Name to appear in result and plots. Overrides default. random_state : int, optional Set seed for random number generator (default is random seed). Returns ------- ModelAspectImportance class object Explanation object containing the main result attribute and the plot method. """ loss_function = checks.check_method_loss_function(self.explainer, loss_function) mai_result = None if variable_groups is None: variable_groups = self.get_aspects(h) # get results from triplot if it was precalculated with the same params if self._full_hierarchical_aspect_importance is not None: if ( self._mt_params["loss_function"] == loss_function and self._mt_params["N"] == N and self._mt_params["B"] == B and self._mt_params["type"] == type ): h = min(1, h) h_selected = np.unique( self._full_hierarchical_aspect_importance.loc[ self._full_hierarchical_aspect_importance.h >= h ].h )[0] mai_result = self._full_hierarchical_aspect_importance.loc[ self._full_hierarchical_aspect_importance.h == h_selected ] ai = ModelAspectImportance( loss_function=loss_function, type=type, N=N, B=B, variable_groups=variable_groups, processes=processes, random_state=random_state, _depend_matrix=self.depend_matrix ) # calculate if there was no results if mai_result is None: ai.fit(self.explainer) else: mai_result = mai_result[ [ "aspect_name", "variable_names", "dropout_loss", "dropout_loss_change", "min_depend", "vars_min_depend", "label", ] ] ai.result = mai_result if label is not None: ai.result["label"] = label return ai def model_triplot(self, loss_function=None, type='variable_importance', N=1000, B=10, processes=1, random_state=None)-

Calculate model-level hierarchical aspect importance

Parameters

loss_function:{'rmse', '1-auc', 'mse', 'mae', 'mad'}orfunction, optional- If string, then such loss function will be used to assess aspect importance

(default is

'rmse'or'1-auc', depends onexplainer.model_typeattribute). type:{'variable_importance', 'ratio', 'difference'}, optional- Type of transformation that will be applied to dropout loss

(default is

'variable_importance', which is Permutational Variable Importance). N:int, optional- Number of observations that will be sampled from the

explainer.dataattribute before the calculation of aspect importance.Nonemeans alldata(default is1000). B:int, optional- Number of permutation rounds to perform on each variable (default is

10). processes:int, optional- Number of parallel processes to use in calculations. Iterated over

B(default is1, which means no parallel computation). random_state:int, optional- Set seed for random number generator (default is random seed).

Returns

ModelTriplot class object- Explanation object containing the main result attribute and the plot method.

Expand source code Browse git

def model_triplot( self, loss_function=None, type="variable_importance", N=1000, B=10, processes=1, random_state=None, ): """Calculate model-level hierarchical aspect importance Parameters ---------- loss_function : {'rmse', '1-auc', 'mse', 'mae', 'mad'} or function, optional If string, then such loss function will be used to assess aspect importance (default is `'rmse'` or `'1-auc'`, depends on `explainer.model_type` attribute). type : {'variable_importance', 'ratio', 'difference'}, optional Type of transformation that will be applied to dropout loss (default is `'variable_importance'`, which is Permutational Variable Importance). N : int, optional Number of observations that will be sampled from the `explainer.data` attribute before the calculation of aspect importance. `None` means all `data` (default is `1000`). B : int, optional Number of permutation rounds to perform on each variable (default is `10`). processes : int, optional Number of parallel processes to use in calculations. Iterated over `B` (default is `1`, which means no parallel computation). random_state : int, optional Set seed for random number generator (default is random seed). Returns ------- ModelTriplot class object Explanation object containing the main result attribute and the plot method. """ loss_function = checks.check_method_loss_function(self.explainer, loss_function) # get proper loss_function for model_type mt = ModelTriplot(loss_function, type, N, B, processes, random_state) self._mt_params = {"loss_function": loss_function, "type": type, "N": N, "B": B} # save params for future calls of model_parts mt.fit(self) return mt def plot_dendrogram(self, title='Hierarchical clustering dendrogram', lines_interspace=20, rounding_function=<function round_>, digits=3, show=True)-

Plot the hierarchical clustering dendrogram of variables

Parameters

title:str, optional- Title of the plot (default is "Hierarchical clustering dendrogram").

lines_interspace:float, optional- Interspace between lines of dendrogram in px (default is

20). rounding_function:function, optional- A function that will be used for rounding numbers (default is

np.around). digits:int, optional- Number of decimal places (

np.around) to round contributions. Seerounding_functionparameter (default is3). show:bool, optionalTrueshows the plot;Falsereturns the plotly Figure object that can be edited or saved using thewrite_image()method (default isTrue).

Returns

Noneorplotly.graph_objects.Figure- Return figure that can be edited or saved. See

showparameter.

Expand source code Browse git

def plot_dendrogram( self, title="Hierarchical clustering dendrogram", lines_interspace=20, rounding_function=np.round, digits=3, show=True, ): """Plot the hierarchical clustering dendrogram of variables Parameters ---------- title : str, optional Title of the plot (default is "Hierarchical clustering dendrogram"). lines_interspace : float, optional Interspace between lines of dendrogram in px (default is `20`). rounding_function : function, optional A function that will be used for rounding numbers (default is `np.around`). digits : int, optional Number of decimal places (`np.around`) to round contributions. See `rounding_function` parameter (default is `3`). show : bool, optional `True` shows the plot; `False` returns the plotly Figure object that can be edited or saved using the `write_image()` method (default is `True`). Returns ------- None or plotly.graph_objects.Figure Return figure that can be edited or saved. See `show` parameter. """ m = len(self.depend_matrix.columns) plot_height = 78 + 71 + m * lines_interspace + (m + 1) * lines_interspace / 4 fig = self._hierarchical_clustering_dendrogram fig = plot.add_text_and_tooltips_to_dendrogram( fig, self._dendrogram_aspects_ordered, rounding_function, digits ) fig = plot._add_points_on_dendrogram_traces(fig) fig.update_layout( title={"text": title, "x": 0.15}, yaxis={"automargin": True, "autorange": "reversed"}, height=plot_height, ) if show: fig.show(config=_theme.get_default_config()) else: return fig def predict_parts(self, new_observation, variable_groups=None, type='default', h=0.5, N=2000, B=25, n_aspects=None, sample_method='default', f=2, label=None, processes=1, random_state=None)-

Calculate predict-level aspect importance

Parameters

new_observation:pd.Seriesornp.ndarray (1d)orpd.DataFrame (1,p)- An observation for which a prediction needs to be explained.

variable_groups:dictoflistsorNone- Variables grouped in aspects to calculate their importance (default is

None). type:{'default', 'shap'}, optional- Type of aspect importance/attributions (default is

'default', which means the use of simplified LIME method). h:float, optional- Threshold to apply when forming aspects, i.e., the minimum value of the dependency

between the variables grouped in one aspect (default is

0.5). N:int, optional- Number of observations that will be sampled from the

explainer.dataattribute before the calculation of aspect importance (default is2000). B:int, optional- Parameter specific for

type == 'shap'. Number of random paths to calculate aspect attributions (default is25). NOTE: Ignored iftypeis not'shap'. n_aspects:int, optional- Parameter specific for

type == 'default'. Maximum number of non-zero importances, i.e. coefficients after lasso fitting (default isNone, which means the linear regression is used). NOTE: Ignored iftypeis not'default'. sample_method:{'default', 'binom'}, optional- Parameter specific for

type == 'default'. Sampling method for creating binary matrix used as mask for replacing aspects in sampled data (default is'default', which means it randomly replaces one or two zeros per row;'binom'replaces random number of zeros per row). NOTE: Ignored iftypeis not'default'. f:int, optional- Parameter specific for

type == 'default'andsample_method == 'binom'. Parameter controlling average number of replaced zeros for binomial sampling (default is2). NOTE: Ignored iftypeis not'default'orsample_methodis not'binom'. label:str, optional- Name to appear in result and plots. Overrides default.